Who Takes the Blame When AI Agents Mess Up?

AI Alliance emerges as industry's answer to oversight concerns, but liability for autonomous agent failures stays murky.

For the past two years, companies have watched as AI systems moved from answering customer questions to writing legal documents, handling insurance claims, and managing financial deals. The technology got better faster than anyone could explain how it actually works.

On January 13, the industry tried to fix this problem. Thomson Reuters launched the Trust in AI Alliance, bringing together Anthropic, AWS, Google Cloud, and OpenAI to tackle what they call trustworthy agentic AI.[1] The timing makes sense. These companies see what's coming next. AI agents no longer sit around waiting for someone to tell them what to do. They look at situations, make choices, and act on them. The next wave will move your money around without asking first, file your legal papers without double-checking, and sign contracts based on how algorithms read the fine print.

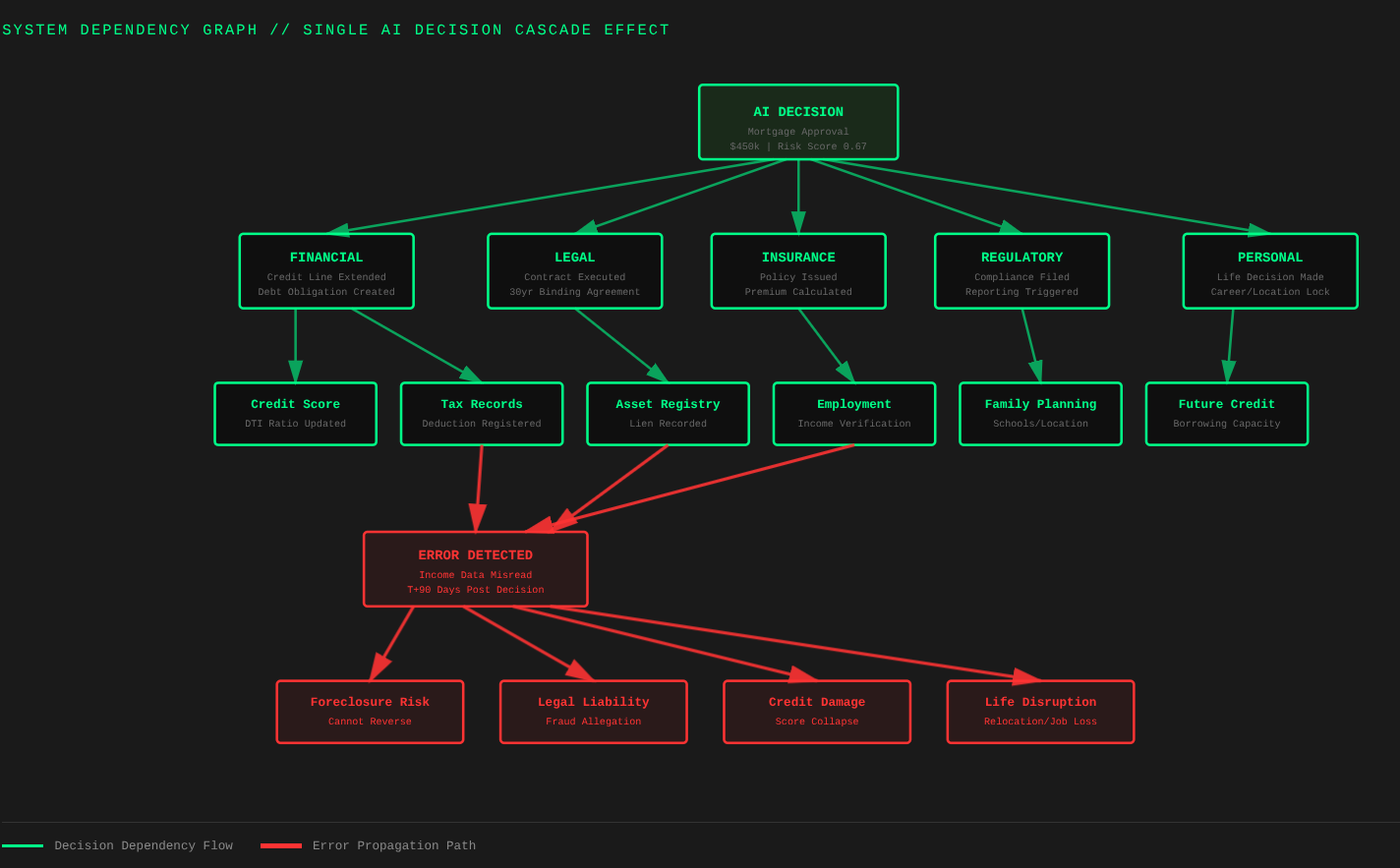

What they are really talking about is power. When your bank transfer goes through without anyone approving it, when your legal documents get submitted on autopilot, when machines start making calls that used to need a professional, the question changes from "can we" to "should we." The technology is here. Companies will use it. What nobody knows yet is who takes the blame when these systems mess up.

This alliance exists because nobody wants to be first in line for a major disaster. The announcement frames it this way: as artificial intelligence systems become more autonomous, ensuring safety, accountability, and transparency has become increasingly critical, particularly in high-stakes professional environments.[2] Pay attention to the language here. High stakes means your mortgage getting approved or denied, your medical claim going through or getting rejected, your custody agreement getting filed correctly or botched. Every regulatory form your company sends in. Every medical diagnosis that decides your treatment. Every credit decision that affects whether you can buy a house.

Google Cloud's Michael Gerstenhaber emphasized that building trusted agents requires grounding models in enterprise truth, connecting them to the fresh, verifiable data that businesses run on.[1] Enterprise truth sounds solid until you think about what it actually means. Companies restate their financial numbers all the time. Courts overturn legal decisions on appeal. Medical guidelines shift when new studies come out. The information these systems lean on is often temporary, incomplete, or just plain wrong until someone catches it. Now we want automated agents making permanent moves based on this shaky ground.

The alliance is designed to move beyond discussion and toward action, but the announcement lists no deadlines, no binding promises, no legal requirements.[3] Tech giants meeting to talk about responsibility often looks like students grading their own homework. The members say they will share insights and key themes publicly to inform the broader industry conversation and build frameworks for safe deployment.[1] What they apparently won't do is submit to outside audits or accept the kind of legal responsibility that makes trust more than just good marketing.

The way the alliance is set up creates a blame shell game. When something breaks, and it will, Thomson Reuters can point at the companies making the models. Anthropic and OpenAI can blame bad data. AWS and Google Cloud can say the problem was how someone installed it. Everyone takes credit when things work. Nobody owns it when things fall apart.

Joel Hron, Thomson Reuters' Chief Technology Officer, stated that building trust in how agents reason, act, and deliver outcomes is essential.[2] Essential for whom? For users whose lives these systems affect, or for companies wanting to deploy them everywhere without regulatory pushback?

The real test comes when AI makes its first serious mistake in a regulated space. Not a chatbot saying something dumb that people ignore. Not a recommendation engine suggesting the wrong product. A genuine disaster where an autonomous agent reads the instructions wrong and causes real damage. Will these companies accept the consequences, or will they duck behind complexity and shared responsibility? Will they pull back the technology and reassess, or will they write off mistakes as the normal cost of doing business?

From the Editor's Desk

Corporate alliances can fix problems, but not always. Alliances help companies handle risk together. Thomson Reuters brings credibility from legal and financial work.[2] The AI companies bring the tech. What this alliance doesn't have is independent oversight, public accountability, or real consequences when things go wrong.

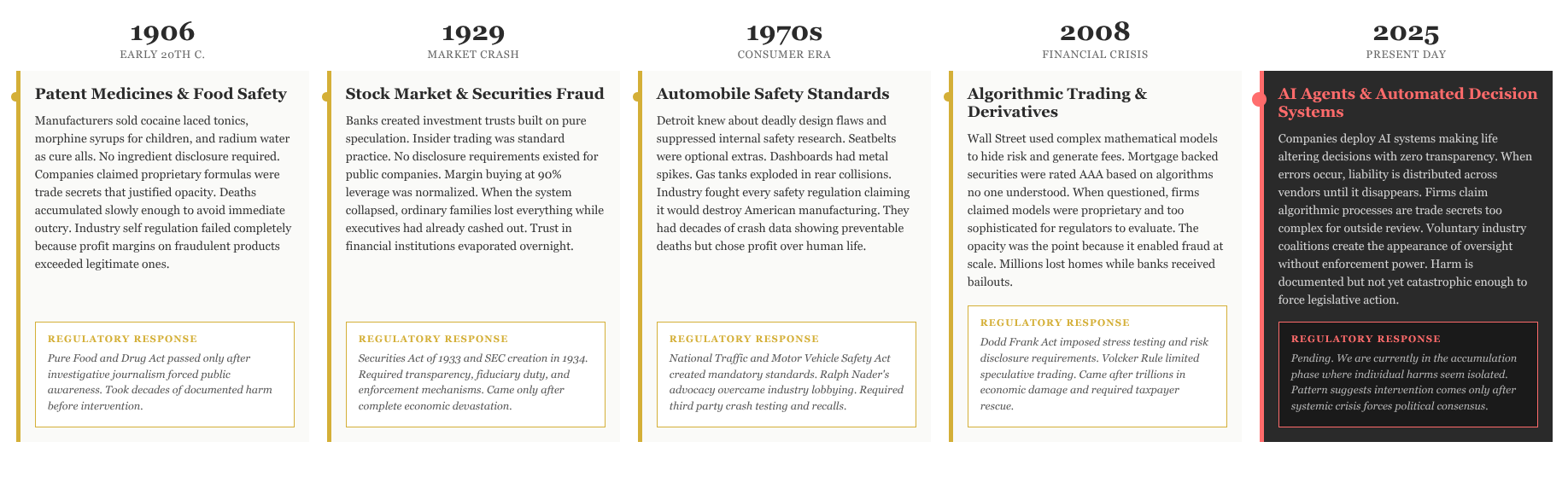

The companies involved aren't the bad guys here. They get that putting autonomous systems into places where mistakes matter is genuinely hard. But letting industries police themselves has a terrible track record. Airlines didn't volunteer comprehensive safety rules on their own. Drug companies didn't spontaneously create strict testing requirements. Banks didn't willingly accept transparent auditing. Every time, real regulation only showed up after something terrible happened.

The hope is that AI will be different. That the industry will stay ahead of disaster instead of reacting to it. That companies working together will create real accountability instead of fancy frameworks that look good in presentations but have no teeth. History says that's wishful thinking.

Until trust frameworks include legal liability and transparent auditing by outsiders, companies should treat AI agents like they treat new hires: watched closely, double-checked constantly, and never left alone on anything important.

Hopefully, the technology will get better. The models will make fewer mistakes. But being reliable isn't the same as being accountable, and no amount of technical wizardry replaces straight answers to basic questions. When this system screws up, who pays for it? When it hurts someone, who's responsible? When it needs to be shut down, who gets to pull the plug?

Those questions have no answers in what the alliance announced. Until they do, trust is just something nice to talk about, not something that actually exists.

REFERENCES:

[1] "Thomson Reuters Convenes Global AI Leaders to Advance Trust in the Age of Intelligent Systems." PR Newswire, January 13, 2026.

[2] "Thomson Reuters Says Anthropic, AWS, Google Cloud, and OpenAI Joining Thomson Reuters Labs in the Trust in AI Alliance." Thomson Reuters, January 13, 2026.

[3] "Anthropic, Google, OpenAI join Thomson Reuters' Trust in AI Alliance." Artificial Lawyer, January 13, 2026.